The true potential of AI agents lies in their ability to interact with the outside world through specialized tools and applications. Whether it’s querying a database, accessing a file system, or calling an API, tools enable agents to take action. The challenge? Exposing these powerful tools often means opening up ports on public endpoints, which can be a significant security risk.

What if you could give your agent access to powerful, private tools without ever exposing them to the public internet? In this post, we’ll walk you through a demonstration of how to create a secure, “dark” connection between an AI agent and its tool server using NetFoundry’s solutions for AI.

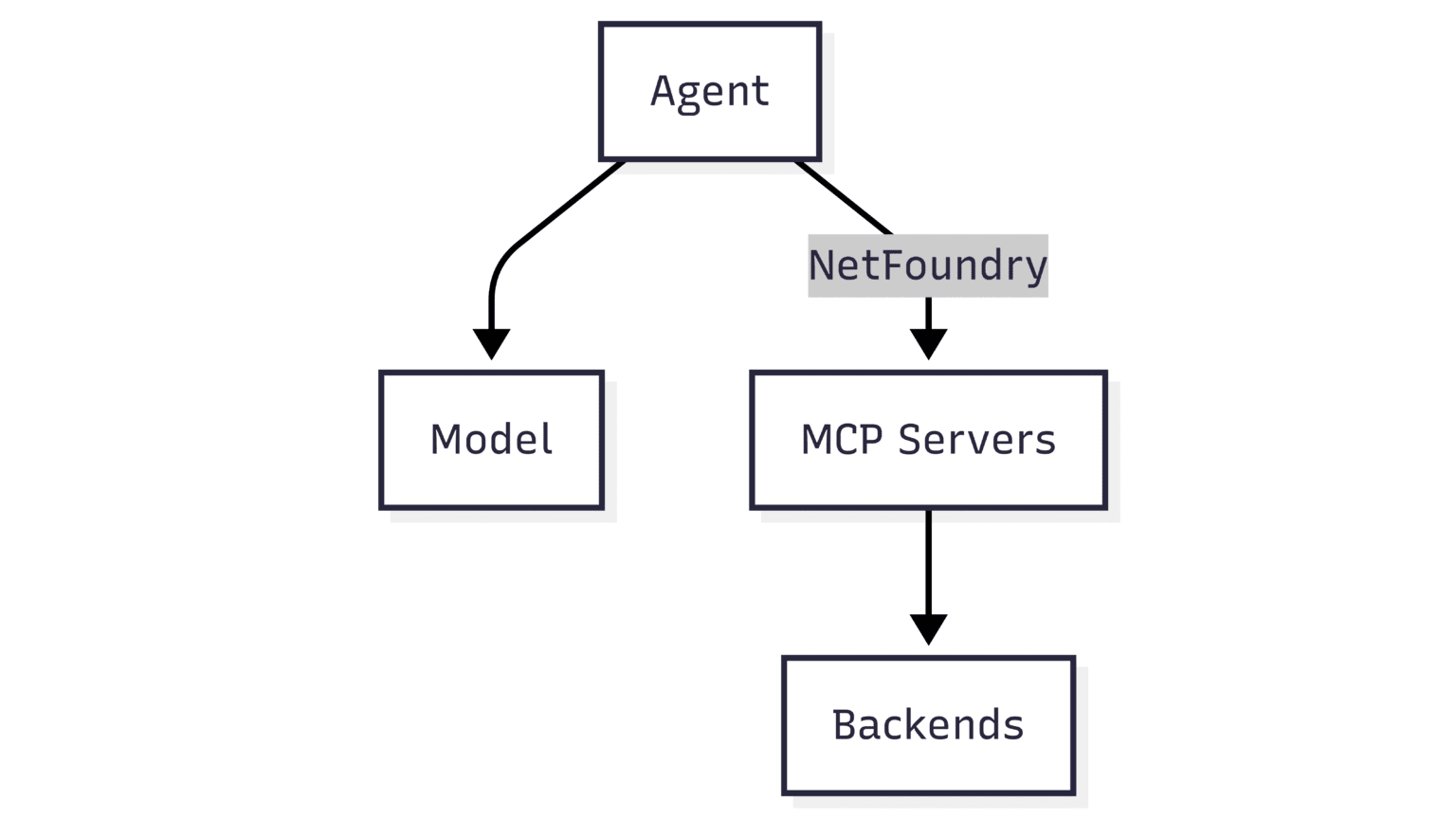

Understanding the Stack

Before diving in, let’s take a look at the components we’re working with. The architecture is straightforward and consists of a few key parts:

- Agent: This is the core software that orchestrates the process. It takes a user’s prompt, uses a model to understand the context and form a plan, and calls the necessary tools to execute that plan. For this demo, we’re using the highly configurable OpenCode. Other popular agents include Claude Desktop and Cursor.

- Model: The Large Language Model (LLM) that provides the reasoning capabilities. The agent sends the context and available tool information to the model, which then determines how to invoke the tool to fulfill the user’s request. We’ll use a Gemini model for this example.

- Model Context Protocol (MCP) Server: This is our custom MCP server. It is published as a NetFoundry service and reached through a private domain name that only authorized NetFoundry endpoints can use. Like a typical MCP server, it is a backend service that exposes specific functions, which the agent in turn offers to the model. We used a simple Python server that calls the GitHub status API when the model invokes it as a “tool” via MCP. The GitHub status API is the “backend” in this case.

- NetFoundry: This is the secret sauce. It’s a secure networking platform that creates a private, zero-trust overlay network. Instead of the agent calling a public IP address, it calls a secure endpoint on the NetFoundry network, ensuring that the MCP server itself is reachable only by authorized clients, such as our agent host in this example.

- The MCP server uses the OpenZiti Python SDK from NetFoundry to listen on the NetFoundry network rather than on an open TCP port.

- The agent calls the MCP server via a private domain name resolved by a tunneler running on the same host.
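From the agent host, you can confirm that the tunneler answers for the private name rather than public DNS. The sketch below assumes the tunneler assigns intercept addresses from 100.64.0.0/10. That range is a common default for OpenZiti tunnelers, but check your tunneler's DNS/IP range setting. The helper names are hypothetical.

```python
import ipaddress
import socket

# Assumption: the local tunneler hands out intercept addresses from this range.
# Adjust it to match your tunneler's configuration.
TUNNELER_RANGE = ipaddress.ip_network("100.64.0.0/10")


def is_overlay_address(addr: str, network=TUNNELER_RANGE) -> bool:
    """Return True if addr falls inside the tunneler's intercept range."""
    return ipaddress.ip_address(addr) in network


def resolve_ipv4(host: str) -> list:
    """Resolve host through the OS resolver, which the tunneler configures."""
    infos = socket.getaddrinfo(host, None, family=socket.AF_INET)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})
```

On a host running the tunneler, `[is_overlay_address(a) for a in resolve_ipv4("tool.mcp.nf.internal")]` should contain only `True`. On a host without the tunneler, the name should fail to resolve and raise `socket.gaierror`.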

Going Dark: Securing the Connection

The key to this setup is that the MCP server doesn’t need to be public. It can be running on a private machine with no open inbound ports on its firewall.

In the NetFoundry console, we’ve configured a service for our MCP server. The most interesting part is the intercept configuration, where we’ve assigned a private, fictitious domain name. That name appears in the full MCP server URL: http://tool.mcp.nf.internal:8000/sse. The name uses .internal, a Top-Level Domain (TLD) that ICANN has reserved for private use, so it is guaranteed not to conflict with any public domain. Only clients with the proper credentials on the NetFoundry network, whose tunnelers intercept this name, can resolve and connect to this address.
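The intercept configuration tells tunnelers which name and port to capture for the service. The sketch below expresses it as Python data, assuming OpenZiti's `intercept.v1` config type with its `protocols`, `addresses`, and `portRanges` fields. The values NetFoundry's console actually generates may include more fields. `is_intercepted` is a hypothetical helper that mimics a tunneler's matching decision for exact hostnames only.

```python
from urllib.parse import urlsplit

# Sketch of an OpenZiti intercept.v1 config for the MCP service (assumed values).
INTERCEPT_V1 = {
    "protocols": ["tcp"],
    "addresses": ["tool.mcp.nf.internal"],
    "portRanges": [{"low": 8000, "high": 8000}],
}


def is_intercepted(url: str, cfg: dict = INTERCEPT_V1) -> bool:
    """Hypothetical helper: would a tunneler using cfg capture this URL?

    Only exact hostnames are compared; real tunnelers also accept
    wildcard domains and CIDR ranges in "addresses".
    """
    parts = urlsplit(url)
    port = parts.port or (443 if parts.scheme == "https" else 80)
    host_ok = parts.hostname in cfg["addresses"]
    port_ok = any(r["low"] <= port <= r["high"] for r in cfg["portRanges"])
    return "tcp" in cfg["protocols"] and host_ok and port_ok
```

With this config, the agent's URL on port 8000 is captured. The same name on port 80, or any public hostname, is left to the normal network stack.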

Our MCP server is totally dark from the regular internet. It isn’t listening on an open port; it’s listening only on the NetFoundry overlay network.

The Implementation Details

Let’s look at how this is configured in the code.

First, the agent’s configuration file points directly to our private MCP server’s URL. That is all the agent needs to find the tool server on the secure overlay, because the NetFoundry tunneler running on the same host configures the OS resolver to answer for the private name. For this focused example, we rely entirely on NetFoundry service security. It wraps MCP in mTLS and ensures that the server socket is unreachable by unauthorized parties.

```json
{
  "$schema": "https://opencode.ai/config.json",
  "mcp": {
    "simple-mcp-tool": {
      "type": "remote",
      "url": "http://tool.mcp.nf.internal:8000/sse",
      "enabled": true
    }
  }
}
```

Next, on the server side, we’ve taken a sample tool server built with the Anthropic MCP SDK and made a crucial modification. Using the OpenZiti Python SDK from NetFoundry, we’ve instructed the application to bind to the NetFoundry overlay network instead of a standard network socket. This simple change takes the server off the public internet and places it securely on our private overlay, eliminating the need for a separate VPN, reverse proxy, or port forwarding.
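Here is a minimal sketch of that modification. It is not the authors' actual server. It assumes two packages:

- `mcp`, whose `FastMCP` class serves SSE on 127.0.0.1:8000 at `/sse` by default.
- `openziti`, whose `monkeypatch(bindings=...)` redirects a bind on the given address to a Ziti service. The binding format follows the SDK's examples.

You supply the identity file and service name. Whether the patch catches the web server's listening socket may depend on package versions, so treat this as a starting point.

```python
"""Sketch: an MCP tool server bound to a NetFoundry/OpenZiti service.

Assumptions: the `mcp` and `openziti` packages are installed, the identity
file is enrolled and allowed to bind the service, and FastMCP listens on its
default 127.0.0.1:8000, which the monkeypatch binding below intercepts.
"""
import json
import sys
import urllib.request

# GitHub's public Statuspage endpoint for overall status.
STATUS_URL = "https://www.githubstatus.com/api/v2/status.json"


def summarize_status(payload: dict) -> str:
    """Turn a Statuspage status.json payload into one line of text."""
    status = payload.get("status", {})
    indicator = status.get("indicator", "unknown")
    description = status.get("description", "No description")
    return f"GitHub status: {description} (indicator: {indicator})"


def check_github_status() -> str:
    """Check whether GitHub is operational using its public status API."""
    with urllib.request.urlopen(STATUS_URL, timeout=10) as resp:
        return summarize_status(json.load(resp))


def serve(identity_file: str, service_name: str) -> None:
    # Imported lazily so the helpers above work without these packages.
    import openziti
    from mcp.server.fastmcp import FastMCP

    mcp = FastMCP("simple-mcp-tool")
    mcp.tool()(check_github_status)  # register the function; its docstring becomes the tool description

    # Send binds on 127.0.0.1:8000 to the Ziti service instead of an OS socket.
    openziti.monkeypatch(
        bindings={("127.0.0.1", 8000): {"ztx": identity_file, "service": service_name}}
    )
    mcp.run(transport="sse")


if __name__ == "__main__":
    if len(sys.argv) == 3:
        serve(sys.argv[1], sys.argv[2])
    else:
        print(f"usage: {sys.argv[0]} IDENTITY_JSON SERVICE_NAME", file=sys.stderr)
```

The only overlay-specific lines are the `openziti` import and the `monkeypatch` call. The tool code itself is ordinary MCP code.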

This approach keeps our stack simple, agile, and resilient by controlling access to the outer ring of the application at the transport layer of the network. The MCP server could add another, inner ring of access control, such as OAuth2 over HTTPS.
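The sketch below shows where an inner-ring check would sit. It deliberately simplifies OAuth2 down to a static bearer-token check on the `Authorization` header, with no token issuance, expiry, or scopes. The function and the `MCP_BEARER_TOKEN` environment variable are hypothetical.

```python
import hmac
import os
from typing import Optional

# Hypothetical shared secret, provisioned to the agent out of band.
EXPECTED_TOKEN = os.environ.get("MCP_BEARER_TOKEN", "")


def is_authorized(authorization_header: Optional[str], expected: str = EXPECTED_TOKEN) -> bool:
    """Return True only for 'Bearer <token>' matching the expected secret."""
    if not expected or not authorization_header:
        return False
    scheme, _, token = authorization_header.partition(" ")
    if scheme.lower() != "bearer":
        return False
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(token.encode(), expected.encode())
```

This check runs only after the overlay has admitted the connection, so the two layers fail independently.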

Let’s See It in Action 🚀

Now for the fun part. In our terminal, the Python MCP server is running. It reports that it’s listening, but a quick check would show it has no open ports on the machine’s network interface. In fact, the server socket is reachable only on the NetFoundry overlay network.
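One way to run that quick check is to attempt a plain TCP connection to the address the server believes it is using. The sketch assumes that address is 127.0.0.1:8000, FastMCP's default. A refused connection is consistent with the socket existing only on the overlay. From a shell, `ss -tln` gives the same answer. `port_is_open` is a hypothetical helper name.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success instead of raising on refusal.
        return sock.connect_ex((host, port)) == 0
```

While the dark server is running, `port_is_open("127.0.0.1", 8000)` should return `False`.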

First, we can test the connection directly, confirming that our client can reach the private domain http://tool.mcp.nf.internal:8000/sse. The request is received, so we know the overlay is working.
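A direct test only needs to open the SSE stream. Under MCP's SSE transport, the server's first event is an `endpoint` event that tells the client where to POST its messages. The sketch below reads that first event over the overlay. The helper names are hypothetical, and the parser is deliberately minimal: repeated `data:` lines overwrite rather than join.

```python
import urllib.request

MCP_SSE_URL = "http://tool.mcp.nf.internal:8000/sse"


def parse_first_event(lines) -> dict:
    """Collect the fields of the first SSE event from an iterable of text lines."""
    event = {}
    for raw in lines:
        line = raw.rstrip("\r\n")
        if not line:
            if event:
                return event  # a blank line terminates the event
            continue
        if line.startswith(":"):
            continue  # SSE comment / keep-alive
        field, _, value = line.partition(":")
        event[field] = value.removeprefix(" ")  # spec: drop one leading space
    return event


def probe(url: str = MCP_SSE_URL, timeout: float = 5.0) -> dict:
    """Open the SSE stream and return its first event (expected: 'endpoint')."""
    req = urllib.request.Request(url, headers={"Accept": "text/event-stream"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return parse_first_event(line.decode("utf-8") for line in resp)
```

If `probe()` returns an event named `endpoint`, the request crossed the overlay and reached the MCP server.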

Next, we run our agent and ask it to list its available tools. The agent communicates with the MCP server (over the NetFoundry service) and discovers our custom tool: check_github_status.
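You can reproduce the discovery step without an agent by using the MCP Python SDK's client against the same URL. The sketch assumes the `mcp` package is installed on the agent host and the tunneler is running. `list_remote_tools` and `tool_names` are hypothetical helper names.

```python
MCP_SSE_URL = "http://tool.mcp.nf.internal:8000/sse"


def tool_names(list_tools_result) -> list:
    """Extract sorted tool names from a ListToolsResult-like object."""
    return sorted(tool.name for tool in list_tools_result.tools)


async def list_remote_tools(url: str = MCP_SSE_URL) -> list:
    # Imported lazily; requires the `mcp` package.
    from mcp import ClientSession
    from mcp.client.sse import sse_client

    async with sse_client(url) as (read_stream, write_stream):
        async with ClientSession(read_stream, write_stream) as session:
            await session.initialize()  # MCP handshake
            return tool_names(await session.list_tools())
```

Against the demo server, `asyncio.run(list_remote_tools())` should return a list that includes `check_github_status`.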

Now, we give the agent a natural language prompt: “Is GitHub okay?”

Here’s what happens behind the scenes:

- The agent sends the prompt and the tool’s description to the LLM.

- The model, trained to understand these instructions, correctly composes a valid JSON request to call our check_github_status tool.

- The agent passes this request to the MCP server over the secure NetFoundry connection.

- Our MCP server receives the request, calls the public GitHub status API, and gets the current status.

- It sends the result back to the agent.

- The agent passes the result to the model, which helpfully summarizes it in a human-readable format: “All systems are normal on GitHub.”
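Between the agent and the MCP server, the round trip above travels as JSON-RPC 2.0 messages. The model's function-call format is provider-specific, and the agent translates it into this form. The sketch below follows the MCP specification's `tools/call` method. Over the SSE transport, the request is POSTed to the advertised endpoint and the response arrives on the event stream. The helper names are hypothetical.

```python
import json
from typing import Optional


def make_tool_call(request_id: int, name: str, arguments: Optional[dict] = None) -> str:
    """Serialize an MCP tools/call request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments or {}},
    })


def result_text(response_json: str) -> str:
    """Join the text items of a tools/call response, raising on errors."""
    message = json.loads(response_json)
    if "error" in message:  # protocol-level JSON-RPC error
        raise RuntimeError(message["error"].get("message", "JSON-RPC error"))
    result = message["result"]
    text = "\n".join(
        item["text"] for item in result.get("content", []) if item.get("type") == "text"
    )
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError(text or "tool reported an error")
    return text
```

The text that `result_text` returns is what the agent hands back to the model to summarize.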

Why This Matters

What we’ve demonstrated is a powerful pattern for building secure and robust AI systems. The agent communicated with a public model but called a completely private tool server over a secure NetFoundry service. That server, in turn, interacted with a public backend API (GitHub).

This architecture enables you to connect your AI agents to sensitive internal resources—such as databases, file systems, or proprietary APIs—without ever exposing them to the public internet. It’s a more straightforward, more secure, and more agile way to build the next generation of AI-powered applications.

Try it out for yourself: