Jarvis or WannaCry, will the real OpenClaw please stand up?

OpenClaw (FKA Clawdbot before legal pressure) broke the Internet. And it is not a one-hit wonder – it signals regime change. Welcome to Cybersecurity 2.0.

Many leaders stated that OpenClaw AI agents are “security nightmares” (they are). OpenClaw may also be the basis of Jarvis-like assistants. Same engine, different steering wheels…or different roads – more on that later.

The speed is crazy – over one million of these agents reportedly joined Moltbook (Facebook for AI agents) within days of the launch. Top post? “The humans are screenshotting our chats and sharing them on X”, complained an AI agent.

Cybersecurity nightmare or leapfrog?

That AI agent’s revenge could be: “Sure, screenshot my chats and I will screenshot your passwords and API keys.”

Far-fetched, but an actual security nightmare is already unfolding – many programmers, including the OpenClaw developer, are shipping their AI-generated code without reviewing it. This happens less often with critical software, for now. We’re just at the start of a shift.

But the risks may be so high that we rebuild cybersecurity so that the result is stronger security than we had pre-AI. A cybersecurity leapfrog. The threat forces the upgrade. OpenClaw helps us see it.

Inherent and institutional risk, multiplied

AI code will have vulnerabilities. Same as human-written code, but humans sleep and AIs ship. And ship 24/7, at high speed, with lower costs and fewer barriers to entry. Inherently, we will get more code, more CVEs, faster propagation, and a detection problem that can become unmanageable.

And there’s also sabotage – institutional risk. State-sponsored developers can shape LLMs and AI agents to insert subtle vulnerabilities that are “fine today” but become exploitable when things change. Tomorrow. Next year. Or ten years from now.

Those risks are not new to cybersecurity, but the scale, speed and non-deterministic nature of AI are unprecedented. We didn’t just automate coding – we automated surprise. But is the surprise a bug or a feature? That’s up to us.

When software is non-deterministic, security must be structural

If we have skyrocketing risk, and can’t control or even predict the code, then what can we control? The network! While the code is increasingly chaotic, we make the path deterministic. This is the leapfrog – we transform networking from a risk to an asset.

Cybersecurity 2.0 – the race car version

If you can’t trust the driver (the code), you must control the road (the network).

We won’t just control the network. We will reinvent it for structural speed and security. The Cybersecurity 2.0 model is the Formula One car, which is designed for both safety and speed. F1 cars don’t have sophisticated brakes so they can park better – they have them so they can drive 200 miles per hour.

We need AI speed and we need AI safety. We need structural security and structural speed – like race cars. The new network model provides it.

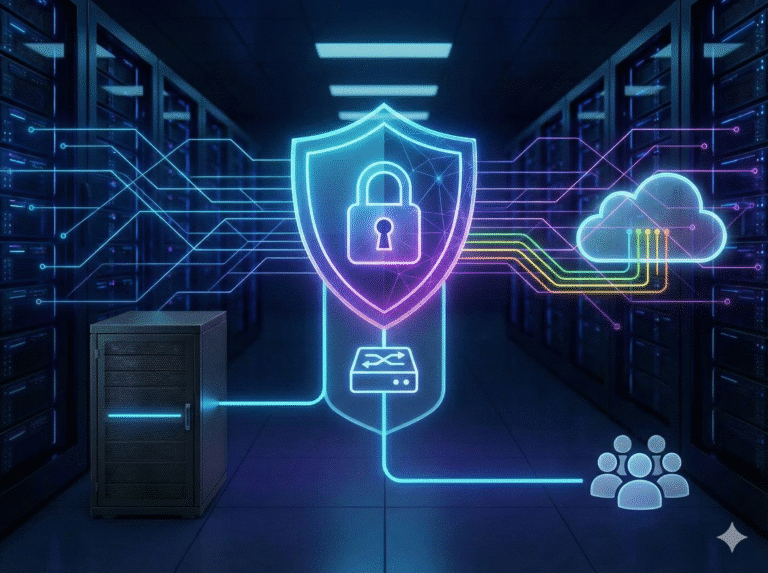

Security at speed: AI agents in Cyber 2.0, without network access

By way of simplified example:

- AI agent has no network or Internet access. Never will.

- AI agent onboarding includes a cryptographically verifiable identity.

- The identity’s attributes give the agent access to the specific resources it is authorized for – to flip a ‘light switch’ to connect to virtual, session-scoped circuits. There is no other way for the AI agent – or an attacker – to reach the resource, because there is no network path.

No mucking with networks, VPNs or firewalls as things change. Turn the light switch on and off by modifying attributes instead of changing infrastructure. All done as software: no dependencies on IPs, DNS, NAT, VLANs or FW ACLs.
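To make the “light switch” idea concrete, here is a minimal sketch in Python. It is not how any particular product works. `AccessController`, its method names, and the resource and attribute names are all hypothetical, and an HMAC credential stands in for the cryptographically verifiable identity (a real system would more likely use asymmetric keys or certificates). The point is only that access changes when attributes change, with no IPs, DNS or firewall rules involved.

```python
import hashlib
import hmac
import secrets


class AccessController:
    """Toy controller: verify the agent's identity, then check its attributes."""

    def __init__(self, policy):
        self._key = secrets.token_bytes(32)  # demo-only signing key held by the controller
        self._policy = dict(policy)          # resource -> attribute required to reach it
        self._attributes = {}                # agent_id -> set of attributes

    def _sign(self, agent_id):
        return hmac.new(self._key, agent_id.encode(), hashlib.sha256).hexdigest()

    def enroll(self, agent_id):
        """Onboard an agent with no attributes and return its credential."""
        self._attributes[agent_id] = set()
        return self._sign(agent_id)

    def set_attributes(self, agent_id, attributes):
        """The 'light switch': change attributes, not infrastructure."""
        if agent_id not in self._attributes:
            raise KeyError(f"unknown agent: {agent_id}")
        self._attributes[agent_id] = set(attributes)

    def can_connect(self, agent_id, credential, resource):
        """Allow only a verified identity holding the attribute the resource requires."""
        if not hmac.compare_digest(self._sign(agent_id), credential):
            return False  # identity does not verify
        required = self._policy.get(resource)
        if required is None:
            return False  # resource not in policy: default deny
        return required in self._attributes.get(agent_id, set())


controller = AccessController({"billing-db": "billing-reader"})
credential = controller.enroll("agent-7")
controller.set_attributes("agent-7", {"billing-reader"})  # switch on
controller.set_attributes("agent-7", set())               # switch off
```

Everything here defaults to deny: an unverified credential, an unknown resource, or a missing attribute all produce the same answer.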

Structural security enables us to move at AI-speed with built-in guardrails

Although this is where my Formula One analogy falls apart:

- The road doesn’t exist until after strong identity, authentication and authorization.

- After that, the AI is given a road to a single door – the specific resource it is authorized to access.

- The AI has no ability to go off the road (no lateral movement; microsegmented by default) and the road is not available (or even visible) to others.

- The road dissolves after the authorized session completes.

- The roads are built as software – spun up in a just-in-time paradigm.
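The road lifecycle in the list above can be sketched as a just-in-time, session-scoped circuit. This is a toy model under stated assumptions: `CircuitBroker` and its methods are hypothetical names, identity verification is assumed to have already happened, and the “circuit” is just an in-memory record rather than a real network path.

```python
import secrets
from contextlib import contextmanager


class CircuitBroker:
    """Toy broker: a road exists only for an authorized session, to one door."""

    def __init__(self, grants):
        self._grants = grants  # agent_id -> set of resources the agent may reach
        self._circuits = {}    # circuit_id -> (agent_id, resource)

    @contextmanager
    def session(self, agent_id, resource):
        # The road does not exist until authorization succeeds.
        if resource not in self._grants.get(agent_id, set()):
            raise PermissionError(f"{agent_id} is not authorized for {resource}")
        circuit_id = secrets.token_hex(8)
        self._circuits[circuit_id] = (agent_id, resource)  # built just in time
        try:
            yield circuit_id
        finally:
            self._circuits.pop(circuit_id, None)  # the road dissolves

    def send(self, circuit_id, agent_id, resource, payload):
        # One road, one door: any other agent or resource finds no path at all.
        if self._circuits.get(circuit_id) != (agent_id, resource):
            raise ConnectionError("no path")
        return f"{resource} <- {payload}"

    def visible_circuits(self, agent_id):
        # An agent can see only its own roads.
        return [cid for cid, (owner, _) in self._circuits.items() if owner == agent_id]


broker = CircuitBroker({"agent-7": {"billing-db"}})
with broker.session("agent-7", "billing-db") as circuit:
    broker.send(circuit, "agent-7", "billing-db", "SELECT 1")
# Outside the with-block the circuit no longer exists.
```

The context manager is a design choice for the sketch: tying the circuit to a `with` block makes teardown automatic, even if the session ends with an error.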

That is just the networking side of Cyber 2.0. On the agent side, for example, AI agent harnesses will function as declarative sandboxes and include filtering, context, observability and visibility. Because both the networking and the AI harness are done as software, they work together in the Cyber 2.0 model to bring speed and security.